Pam Bondi vs. the Senate: Round Two.

Source link

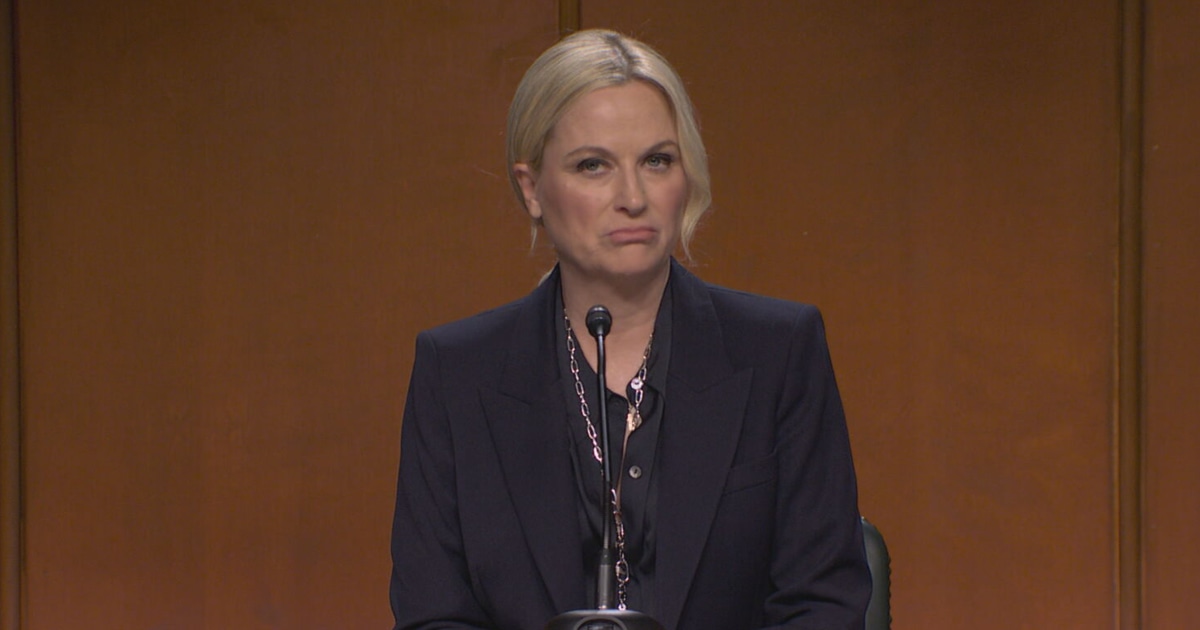

Oct. 12, 2025, 1:54 AM EDT
By Phil Helsel

Pam Bondi vs. the Senate: Round Two. That was the scenario envisaged by “Saturday Night Live” on Saturday, with alum Amy Poehler portraying the attorney general in a follow-up to her combative hearing with Democrats this week.

Asked how President Donald Trump could justify deploying National Guard troops against Americans, Poehler’s Bondi was confrontational.

“Before I don’t answer, I’d like to insult you personally,” Poehler’s Bondi responded.

Fellow former cast member Tina Fey made a surprise appearance as Homeland Security Secretary Kristi Noem, toting an assault-style rifle and making a pitch for applicants to become Immigration and Customs Enforcement officers that included questions like, “Do you need a job now?” and “Do you take supplements that you bought at a gas station?”

“Then buckle up and slap on some Oakleys, big boy: Welcome to ICE,” Fey’s Noem said.

Poehler, a seven-year “SNL” cast member who left in 2008 and went on to “Parks and Recreation” fame, hosted for the third time Saturday.

Her appearance came on the 50th anniversary of “Saturday Night Live,” which premiered Oct. 11, 1975.

“It’s always a dream come true to be here. I remember watching the show in the ’70s, sitting in my house in Burlington, Massachusetts, thinking: ‘I want to be an actress someday — at least until they invent an AI actress who’s funnier and willing to do full-frontal,’” Poehler said in her monologue.

She also had a message of hope for those who may feel overwhelmed. “If there’s a place that feels like home, that you can go back to and laugh with your friends, consider yourself lucky — and I do,” she said.

And she had the last laugh against her imagined AI doppelgänger. “And to that little AI robot watching TV right now who wants to be on this stage someday, I say to you: Beep, boop, beep, boop beep beep,” Poehler said. “Which translates to: You’ll never be able to write a joke, you stupid robot! And I am willing to do full-frontal, but nobody’s asked me, OK?”

Another skit had a cameo by Aubrey Plaza, a former intern and guest host on “SNL” who also starred on “Parks and Recreation.”

In a parody of Netflix’s “The Hunting Wives,” introduced as “the straight but lesbian horny Republican murder drama,” Plaza played “a new new girl” who joined the group. After a sexually charged lesson in how to make a mimosa, Plaza revealed she had a girlfriend, prompting the other women to shout, “lesbian!” and immediately pull their guns on her.

The reunion did not end there. A “Weekend Update” anchor trio of Seth Meyers, Fey and Poehler, who have all been behind the desk, joined current hosts Colin Jost and Michael Che for a quiz show-style battle.

Role Model was Saturday’s musical guest. His performance of “Sally, When The Wine Runs Out” featured an appearance by Charli XCX.

At the end of the episode, “SNL” paid tribute to Oscar-winning actor Diane Keaton, showing a portrait. Keaton died at the age of 79, her daughter said earlier Saturday.

Sabrina Carpenter, who recently released the album “Man’s Best Friend,” is the host and musical guest of next week’s episode.

“SNL” airs on NBC, a division of NBCUniversal, which is also the parent company of NBC News.

Phil Helsel is a reporter for NBC News.